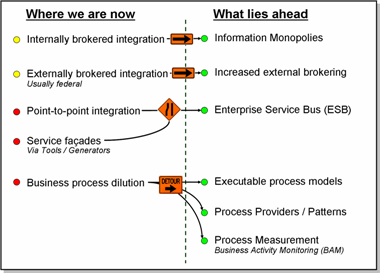

I’ve been kicking around some ideas in this area for a while, in the hope of bringing them together in some coherent fashion. The image above represents the fruits of my labors. I’m not sure that it’s perfect or that I won’t look back on this as a sophomoric effort several months from now. The visual does, however, touch on several major observations I’ve made recently and allows me to illustrate them in a fairly clear and succinct fashion. Some of the terms are heavily overloaded, so each of these trends is discussed in a bit more detail below.

Information Monopolies – Several states have internal brokering setups in place. These information brokerages are found most frequently in the areas of law and justice, financial transactions, and health information. I believe these areas represent the first of several information monopolies. These monopolies were driven by federal data exchange efforts in homeland security and bioterrorism and, in the case of financial data exchanges, system consolidations on common ERP platforms. Expect to see more of these natural information monopolies in the near future with efforts such as Real ID, the National Information Exchange Model (NIEM), Medicaid spend management, and the National Provider Identifier (NPI) taking hold. As these monopolies take shape, certain organizations within state government will be the logical choice as keepers of this data. These keepers should be building their systems in a service-oriented fashion to facilitate easy exchange of information amongst all systems requiring access to this data.

Increased External Brokering – Data exchanges with external business partners are a critical source of information for many state government systems. Many of these exchanges still function via standard mainframe flat file transfers that occur at predefined intervals. The federal government is working to transform its data exchanges to use service-based XML file transfers. Interestingly enough, the private sector is lagging behind a bit in this area. I recall a recent experience where we were notified that the three major credit bureaus had “upgraded” their data exchanges from automated flat file transfers to HTTP POST transfers invoked manually through Web pages (still using the same flat files). I would expect to see an increased push from both the public and private sectors to move towards SOAP-over-HTTP Web services over the next several years.
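To make the flat-file-to-XML move a little more concrete, here is a minimal sketch of wrapping one fixed-width record in XML. The field layout, field names, and sample record are all made up for illustration; a real exchange would follow whatever schema the partners agree on (NIEM, for instance).

```python
import xml.etree.ElementTree as ET

# Hypothetical fixed-width layout: (field name, start offset, end offset).
# These fields are illustrative only and do not come from any real exchange spec.
LAYOUT = [
    ("case_id", 0, 8),
    ("last_name", 8, 28),
    ("amount_cents", 28, 38),
]


def record_to_xml(line: str) -> str:
    """Convert one fixed-width flat-file record into an XML string."""
    record = ET.Element("record")
    for name, start, end in LAYOUT:
        # Slice the field out of the record and drop the padding.
        ET.SubElement(record, name).text = line[start:end].strip()
    return ET.tostring(record, encoding="unicode")


# A made-up 38-character record matching the layout above.
sample = "00012345" + "SMITH".ljust(20) + "0000012500"
print(record_to_xml(sample))
```

The point of the sketch is only that the payload becomes self-describing; the transport (batch transfer, HTTP POST, or a SOAP call) is a separate decision.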

Enterprise Service Bus (ESB) – The two most common patterns of Web service automation that I’ve witnessed in state government are service-based point-to-point integration and service façades. The point-to-point services represent a simplistic upgrade of existing point-to-point mainframe file transfers to use more current technologies. The service façades are usually the product of auto-generated Web service interfaces whose structure mimics that of the underlying components.

Without going as far as to label these uses of services as anti-patterns, they are definitely crude uses of a Web service-based infrastructure. Without publication in a registry or consideration of the consequences of how these services might be used outside of their intended scope, the service infrastructure can be considered anemic, at best. An ESB promises to right some of these wrongs by acting as a central pipeline to enable access to these and other legacy services. Along with this access comes centrally provided infrastructure and transformation services that shield the service providers from some of the change and configuration management headaches associated with providing these services.
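As a rough illustration of the idea, the sketch below models a bus as nothing more than a registry plus an optional per-service transformation. It is a toy, not how any particular ESB product works; the `ServiceBus` class, the `lookup_person` service, and the message fields are all hypothetical.

```python
from typing import Callable, Dict, Optional

Message = Dict[str, str]
Handler = Callable[[Message], Message]


class ServiceBus:
    """Toy bus: a registry of services plus centrally managed transformations."""

    def __init__(self) -> None:
        self._registry: Dict[str, Handler] = {}
        self._transforms: Dict[str, Handler] = {}

    def publish(self, name: str, handler: Handler,
                transform: Optional[Handler] = None) -> None:
        # Registering the service makes it discoverable by name.
        self._registry[name] = handler
        if transform is not None:
            self._transforms[name] = transform

    def call(self, name: str, message: Message) -> Message:
        if name not in self._registry:
            raise LookupError(f"no service published as {name!r}")
        # The bus, not the consumer, adapts the message to the provider's format.
        transform = self._transforms.get(name, lambda m: m)
        return self._registry[name](transform(message))


# Hypothetical legacy service that expects mainframe-style field names.
def legacy_lookup(message: Message) -> Message:
    return {"status": "found", "name": message["LAST_NAME"].upper()}


bus = ServiceBus()
bus.publish(
    "lookup_person",
    legacy_lookup,
    transform=lambda m: {"LAST_NAME": m["lastName"]},
)
print(bus.call("lookup_person", {"lastName": "Smith"}))
```

Because the field mapping lives in the bus, the legacy provider can keep its format while consumers code against the published name and message shape.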

Robust Business Process Infrastructure – The current state of business process integration in most legacy enterprise applications is characterized by a complete commingling of business process logic, data processing logic, and other rudimentary services. In most systems, you’d be hard-pressed to trace the progression of a business process from end to end, let alone measure the effectiveness of the process execution. Yet, business processes are the lingua franca of business managers and business users. Show business people a UML sequence diagram and it’s likely to elicit blank stares. Show them a process flow, on the other hand, and you will probably receive all sorts of feedback on how to modify the flow to make it more representative or efficient.

It’s really a shame that it has taken the IT industry so long to come up with tools that meet this relatively elemental need of business application development (see article – State of Workflow). It does appear that these tools are finally beginning to materialize in the form of Business Process Execution Language (BPEL) compliant servers and tools. Support for BPEL is fairly widespread among the newest Java IDEs and application server vendors (Sun, Oracle, IBM, BEA, and JBoss). On the Microsoft side, Windows Workflow Foundation (WF) and Visual Studio support in the release of the .NET 3.0 Framework represent a major step towards standards-compliant support. BPEL will allow for the orchestration of services and the representation of this orchestration using common process flow diagrams that business analysts and business managers can use to effectively communicate process execution.
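BPEL itself is an XML language, but the underlying idea, keeping the process definition separate from the services it calls, can be sketched in a few lines. Everything here is hypothetical: the eligibility steps, the service functions, and the income threshold are placeholders rather than real program rules.

```python
from typing import Any, Callable, Dict, List, Tuple

Case = Dict[str, Any]
Step = Tuple[str, Callable[[Case], Case]]


# Hypothetical services; in practice these would be calls to real Web services.
def verify_identity(case: Case) -> Case:
    return {**case, "identity_verified": True}


def check_income(case: Case) -> Case:
    # The 2000 threshold is an arbitrary placeholder, not an actual eligibility rule.
    return {**case, "eligible": case["monthly_income"] <= 2000}


def notify_applicant(case: Case) -> Case:
    return {**case, "notified": True}


# The process is plain data: an ordered list of named steps that maps
# directly onto the boxes of a process flow diagram.
ELIGIBILITY_PROCESS: List[Step] = [
    ("Verify identity", verify_identity),
    ("Check income", check_income),
    ("Notify applicant", notify_applicant),
]


def run_process(steps: List[Step], case: Case) -> Tuple[Case, List[str]]:
    """Run each step in order and return the final case plus a trace of step names."""
    trace: List[str] = []
    for name, service in steps:
        case = service(case)
        trace.append(name)
    return case, trace


result, trace = run_process(ELIGIBILITY_PROCESS, {"monthly_income": 1500})
print(trace)
print(result["eligible"])
```

Reordering or inserting a step means editing the list, not the services, which is roughly the separation an orchestration engine is meant to provide.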

There are two other trends that are likely to follow the creation of BPEL-compliant processes. First is the introduction of Business Activity Monitoring (BAM) to measure the ongoing execution of the business processes and provide real-time business intelligence. Second is some degree of standardization of business process models and potentially even the rise of business process outsourcing. In state government, this outsourcing is likely limited to the programmatic execution of the process as well as support and maintenance for ongoing process changes. Business processes that are codified in legislation (taxation and welfare eligibility, for example) are good candidates for automation and standardization of process commonalities.
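At its simplest, the monitoring side amounts to recording how long each named process step takes and summarizing the results. The sketch below assumes a hypothetical `ActivityMonitor` class and made-up step names; a real BAM product would add dashboards, alerts, and persistent storage.

```python
import time
from collections import defaultdict
from statistics import mean
from typing import Any, Callable, DefaultDict, Dict, List


class ActivityMonitor:
    """Collects per-step execution times for a business process."""

    def __init__(self) -> None:
        self._durations: DefaultDict[str, List[float]] = defaultdict(list)

    def record(self, step: str, seconds: float) -> None:
        self._durations[step].append(seconds)

    def timed(self, step: str, func: Callable[..., Any], *args: Any) -> Any:
        # Time the call and record the duration even if the step raises.
        start = time.perf_counter()
        try:
            return func(*args)
        finally:
            self.record(step, time.perf_counter() - start)

    def summary(self) -> Dict[str, Dict[str, float]]:
        return {
            step: {"count": len(values), "avg_seconds": mean(values)}
            for step, values in self._durations.items()
        }


monitor = ActivityMonitor()
# Durations recorded by hand here to keep the example deterministic.
monitor.record("Check income", 1.0)
monitor.record("Check income", 3.0)
# A live step would be wrapped like this (hypothetical step name):
monitor.timed("Verify identity", lambda case: case, {"id": 1})
print(monitor.summary()["Check income"])
```

Once steps are named units in a process definition, this kind of measurement can be applied uniformly instead of being hand-coded into each application.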